July 5, 2024

A new study by Google researchers, though not yet peer-reviewed, paints a concerning picture of generative AI. Once hailed as a revolution in content creation, the technology may be turning into a nightmare for online authenticity.

The study highlights how the misuse of generative AI is fueling a surge of fake content online, including images, videos, and possibly even manipulated evidence. The line between real and fabricated content is becoming increasingly blurred.

The researchers found that a significant portion of generative AI users are leveraging the technology for malicious purposes, including swaying public opinion, enabling scams, and even generating profit.

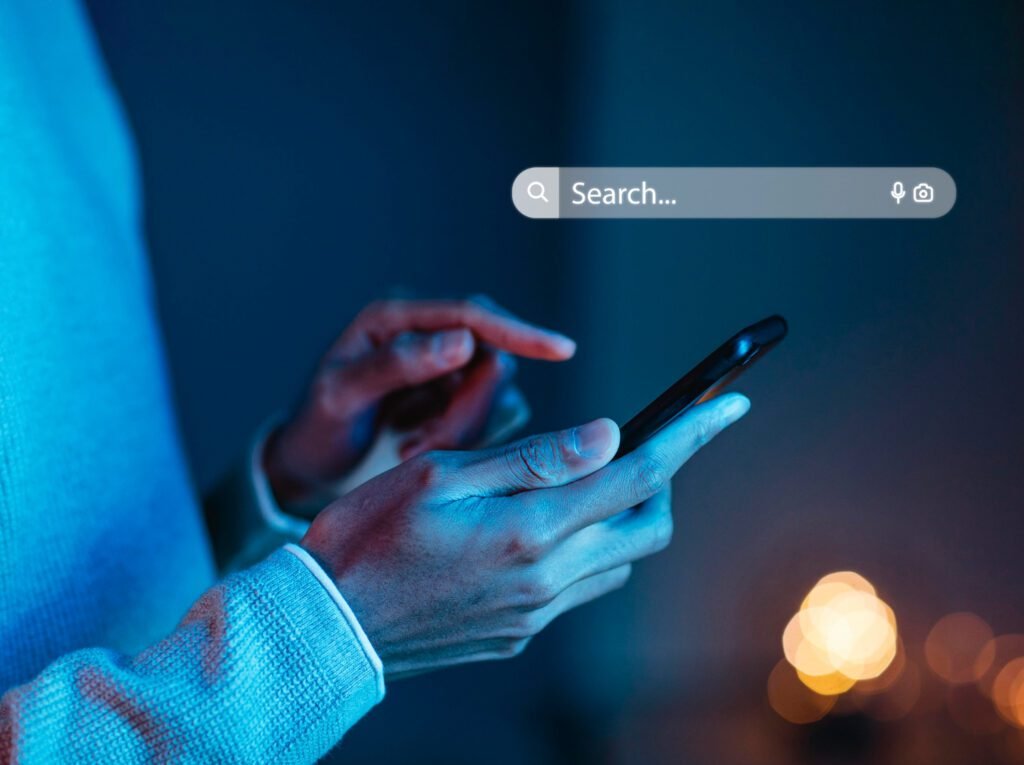

What’s particularly worrisome is the ease of access. Generative AI systems are becoming increasingly sophisticated, yet require minimal technical expertise to operate.

This accessibility is making it easier for bad actors to spread misinformation and manipulate online discourse.

The study doesn’t shy away from calling out Google itself. While Google is a major player in developing generative AI, the researchers point out that the company hasn’t exactly been a shining example of responsible use.

The easy availability of generative AI tools, coupled with Google's own missteps, is creating a perfect storm that severely challenges people's ability to tell real content from fake online.

This constant bombardment of inauthentic content could lead to a general distrust of all online information.

The researchers also warn of a troubling trend: high-profile individuals potentially using AI-generated content to dismiss negative evidence. This tactic could significantly hinder investigations and erode public trust.

Based on this study, the future of online information looks bleak. Generative AI, for all its power, is being heavily misused, and the ability to identify truth online is under siege.

© 2023-2024 All Rights Reserved, JustAiTrends.com